Kubernetes Security: Monitor Audit logs with Grafana

Monitoring Kubernetes audit logs plays an important role in strengthening the overall security posture of the infrastructure. By capturing and assessing the actions performed on the cluster, you can gain valuable insights into user activity, identify potential threats, and proactively mitigate security incidents.

Grafana and Loki, two open-source tools, offer a powerful and effective combination for monitoring Kubernetes audit logs. Grafana provides a user-friendly interface to visualize log data for monitoring and analysis, while Loki excels at storing and querying log streams efficiently.

In this article, you will learn how to enable audit logs in your Kubernetes cluster, then ship them to Grafana for visualization and analysis using Loki and Promtail.

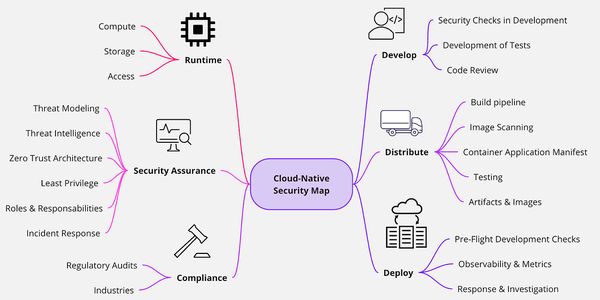

Why Audit Log Monitoring

Audit log monitoring is a critical component of Kubernetes security and offers several key benefits:

- Identifying Unauthorized Activity: Audit logs can reveal unauthorized access attempts, suspicious API calls, or attempts to modify critical system configurations.

- Detecting Resource Exploitation: Log analysis can uncover malicious activities such as privilege escalation, excessive resource consumption, or attempts to exfiltrate sensitive data.

- Enhancing Incident Response: By understanding the sequence of events leading to a security incident, administrators can swiftly identify the root cause and implement appropriate remediation measures.

- Compliance Auditing: Audit logs are essential for compliance with industry regulations and internal security policies.
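As a rough illustration of the first point, the sketch below scans audit events (one JSON object per line, as the API server writes them) and keeps those that were answered with HTTP 401 or 403. The sample events and the function name are made up for the example; the field names (`user.username`, `verb`, `requestURI`, `responseStatus.code`) follow the `audit.k8s.io/v1` Event schema.

```python
import json

# Hypothetical sample events, trimmed to the fields used below.
SAMPLE_LOG = """\
{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","stage":"ResponseComplete","verb":"list","requestURI":"/api/v1/namespaces/kube-system/secrets","user":{"username":"system:anonymous"},"responseStatus":{"code":403}}
{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","stage":"ResponseComplete","verb":"list","requestURI":"/api/v1/pods","user":{"username":"minikube-user"},"responseStatus":{"code":200}}
"""


def denied_requests(lines):
    """Yield (username, verb, requestURI) for events answered with 401 or 403."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        code = event.get("responseStatus", {}).get("code")
        if code in (401, 403):
            username = event.get("user", {}).get("username", "<unknown>")
            yield (username, event.get("verb"), event.get("requestURI"))


denied = list(denied_requests(SAMPLE_LOG.splitlines()))
for username, verb, uri in denied:
    print(f"DENIED {username} {verb} {uri}")
```

A burst of such lines from a single user or source is the kind of signal worth turning into an alert later on.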

Kubernetes audit logs are disabled by default, which means that Kubernetes does not record the actions that are performed on your cluster.

This can make it difficult to track down and identify security threats, such as unauthorized access or resource exploitation.

Grafana and Loki: A Unified Monitoring Solution

Grafana and Loki work seamlessly together to provide a comprehensive audit log monitoring solution:

- Loki as a Centralized Log Store: Loki stores logs collected from across the Kubernetes cluster, including the audit logs written by the API server.

- Promtail as a Log Collector: Promtail, a lightweight agent, collects audit logs from Kubernetes components and sends them to Loki for centralized storage.

- Grafana as a Log Analytics Platform: Grafana provides a rich charting and visualization interface for analyzing and interpreting audit log data.
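To make this division of labor more concrete, here is a minimal sketch of the JSON body accepted by Loki's `/loki/api/v1/push` endpoint: each stream carries a label set plus a list of `[timestamp in nanoseconds, log line]` pairs. The helper name and the label values are illustrative, and the payload is only built, not sent. Promtail itself normally uses a compressed protobuf encoding of the same structure rather than JSON.

```python
import json
import time


def build_push_payload(labels, lines, now_ns=None):
    """Build a Loki push body: one stream, one [timestamp, line] pair per log line."""
    if now_ns is None:
        now_ns = time.time_ns()
    # Loki expects timestamps as strings holding nanoseconds since the epoch.
    values = [[str(now_ns + offset), line] for offset, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


payload = build_push_payload(
    {"job": "audit-logs"},
    ['{"kind":"Event","verb":"get"}'],
    now_ns=1700000000000000000,  # fixed value so the output is reproducible
)
print(json.dumps(payload, indent=2))
```

Grafana then queries Loki by those labels, which is why the label set chosen at collection time matters.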

Demonstration

In this tutorial, we will quickly bootstrap a security-monitored Kubernetes cluster using the Kubernetes audit logging capability.

As mentioned above, Kubernetes audit logs are disabled by default. So, the first step is to fix this by enabling audit logs on our cluster.

Configure Kubernetes Audit Policy

We start by creating a new Kubernetes cluster using Minikube so that you can redo this tutorial and test the setup before trying it on a cloud-deployed cluster.

    $ minikube start
    😄  minikube v1.32.0 on Darwin 14.2.1 (arm64)
    ✨  Using the docker driver based on existing profile
    👍  Starting control plane node minikube in cluster minikube
    🚜  Pulling base image ...
    🐳  Preparing Kubernetes v1.28.3 on Docker 24.0.7 ...
    🔗  Configuring bridge CNI (Container Networking Interface) ...
    🔎  Verifying Kubernetes components...
        ▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5
    🌟  Enabled addons: storage-provisioner, default-storageclass
    🏄  Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

Once the cluster has started, we log in to it with minikube ssh so that we can configure the kube-apiserver with an audit policy and tell the server where audit logs should be stored.

To enable audit logs on our Minikube cluster, we need to:

1. Configure kube-apiserver

Using the official Kubernetes documentation as a reference, we log in to our Minikube VM and configure the kube-apiserver as follows:

    $ minikube ssh
    # we create a backup copy of the kube-apiserver manifest file in case we mess things up 😄
    docker@minikube:~$ sudo cp /etc/kubernetes/manifests/kube-apiserver.yaml .
    # edit the file to add audit logs configurations
    docker@minikube:~$ sudo vi /etc/kubernetes/manifests/kube-apiserver.yaml

First, instruct the kube-apiserver to use an audit policy that defines which events we want to capture, and tell it which file to write them to. This is done by adding these two lines below the kube-apiserver command:

    - command:
      - kube-apiserver
      # add the following two lines
      - --audit-policy-file=/etc/kubernetes/audit-policy.yaml
      - --audit-log-path=/var/log/kubernetes/audit/audit.log
      # end
      - --advertise-address=192.168.49.2
      - --allow-privileged=true
      - --authorization-mode=Node,RBAC
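Before saving the manifest, it can help to double-check that both audit flags made it into the command list. The sketch below is a deliberately simple, line-based check (not a YAML parser) run against a hypothetical excerpt of the manifest; the function and variable names are illustrative.

```python
# Hypothetical excerpt of /etc/kubernetes/manifests/kube-apiserver.yaml
MANIFEST_EXCERPT = """\
  - command:
    - kube-apiserver
    - --audit-policy-file=/etc/kubernetes/audit-policy.yaml
    - --audit-log-path=/var/log/kubernetes/audit/audit.log
    - --advertise-address=192.168.49.2
"""

REQUIRED_FLAGS = ("audit-policy-file", "audit-log-path")


def parse_flags(manifest_text):
    """Collect `- --name=value` list items into a dict of flag name to value."""
    flags = {}
    for raw in manifest_text.splitlines():
        item = raw.strip()
        if item.startswith("- --"):
            name, _, value = item[len("- --"):].partition("=")
            flags[name] = value
    return flags


flags = parse_flags(MANIFEST_EXCERPT)
missing = [name for name in REQUIRED_FLAGS if name not in flags]
print("missing flags:", missing or "none")
```

The same function could be pointed at the real file content on the node; it only confirms that the flags are present, not that the paths they reference exist.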

Both the policy file and the log directory must be reachable from inside the kube-apiserver container, so we need to add matching volumes and volumeMounts.

Scroll down in the same file (kube-apiserver.yaml) and add these lines:

    ...
    volumeMounts:
    - mountPath: /etc/kubernetes/audit-policy.yaml
      name: audit
      readOnly: true
    - mountPath: /var/log/kubernetes/audit/
      name: audit-log
      readOnly: false

And then:

    ...
    volumes:
    - name: audit
      hostPath:
        path: /etc/kubernetes/audit-policy.yaml
        type: File
    - name: audit-log
      hostPath:
        path: /var/log/kubernetes/audit/
        type: DirectoryOrCreate

Be careful with the indentation of each line: a malformed manifest can prevent the kube-apiserver from starting.

However, at this point, even if you are super careful, it won't start… 😈

2. Create the audit-policy

This is because we still need to create the audit-policy file at the location we specified to the kube-apiserver. To keep things simple, we will use the audit policy provided by the Kubernetes documentation.

Here is how:

    docker@minikube:~$ cd /etc/kubernetes/
    docker@minikube:~$ sudo curl -sLO https://raw.githubusercontent.com/kubernetes/website/main/content/en/examples/audit/audit-policy.yaml
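For orientation, an audit policy is an ordered list of rules, and the first rule that matches a request decides the level at which it is recorded (`None`, `Metadata`, `Request`, or `RequestResponse`). The sketch below imitates that first-match behavior with a deliberately simplified matcher: it only looks at verbs and resource names, whereas a real policy can also match on users, groups, namespaces, and more. The rules shown are illustrative and are not a copy of the downloaded file.

```python
# Illustrative rules only; a real policy file is YAML with richer matching.
RULES = [
    {"level": "None", "verbs": ["watch"], "resources": ["endpoints", "services"]},
    {"level": "RequestResponse", "resources": ["pods"]},
    {"level": "Metadata"},  # catch-all rule: no conditions, so it matches everything
]


def audit_level(verb, resource, rules=RULES):
    """Return the level of the first rule whose conditions all match."""
    for rule in rules:
        if "verbs" in rule and verb not in rule["verbs"]:
            continue
        if "resources" in rule and resource not in rule["resources"]:
            continue
        return rule["level"]
    # No rule matched: the request is not audited.
    return "None"


for verb, resource in [("watch", "services"), ("create", "pods"), ("get", "secrets")]:
    print(verb, resource, "->", audit_level(verb, resource))
```

Because order matters, narrow exclusions belong near the top of a policy and the broad catch-all at the bottom.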

Now everything should be OK for the kube-apiserver pod to come back to a running state.

Let’s check:

    docker@minikube:~$ exit
    $ kubectl get po -n kube-system
    NAME                               READY   STATUS    RESTARTS   AGE
    coredns-5dd5756b68-mbtm8           1/1     Running   0          1h
    etcd-minikube                      1/1     Running   0          1h
    kube-apiserver-minikube            1/1     Running   0          13s
    kube-controller-manager-minikube   1/1     Running   0          1h
    kube-proxy-jcn6v                   1/1     Running   0          1h
    kube-scheduler-minikube            1/1     Running   0          1h
    storage-provisioner                1/1     Running   0          1h

3. Ensure that audit logs are being generated

Now we can log back in to the Minikube VM to check that our audit logs are correctly written to the audit.log file we configured.

    $ minikube ssh
    docker@minikube:~$ sudo cat /var/log/kubernetes/audit/audit.log
    ...
    {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata", ...
    "authorization.k8s.io/reason":"RBAC: allowed by ClusterRoleBinding \"system:public-info-viewer\" of ClusterRole
    {"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Request", ...
    ...
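Raw audit lines are hard to read by eye, so a quick summary can confirm that the file contains what we expect. The sketch below counts events per (user, verb) pair, keeping only the `ResponseComplete` stage so that a request logged at several stages is counted once. The sample lines and user names are hypothetical; on the node, the same function could be fed the lines of /var/log/kubernetes/audit/audit.log.

```python
import json
from collections import Counter

# Hypothetical sample lines, trimmed to the fields used below.
SAMPLE_LINES = [
    '{"stage":"RequestReceived","verb":"get","user":{"username":"alice"}}',
    '{"stage":"ResponseComplete","verb":"get","user":{"username":"alice"}}',
    '{"stage":"ResponseComplete","verb":"get","user":{"username":"alice"}}',
    '{"stage":"ResponseComplete","verb":"list","user":{"username":"system:kube-scheduler"}}',
    'not a json line',
]


def summarize(lines):
    """Count completed requests per (username, verb), skipping unparsable lines."""
    counts = Counter()
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if event.get("stage") != "ResponseComplete":
            continue
        username = event.get("user", {}).get("username", "<unknown>")
        counts[(username, event.get("verb"))] += 1
    return counts


summary = summarize(SAMPLE_LINES)
for (username, verb), count in summary.most_common():
    print(f"{count:4d}  {username}  {verb}")
```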

Yes, we have our logs. 🥳

Now we need to set up the monitoring stack and push these logs to Loki/Grafana.

Deploying the monitoring stack

The stack we will be using consists of Grafana, Loki and Promtail.

First, we will use Promtail to push logs from within our cluster node to Loki.

Loki will aggregate these logs and store them for further analysis or audit needs.

Finally, Grafana will visualize these logs during assessments and investigations, and can raise alerts for specific use cases.

Let’s Level-Up our game with Infrastructure as Code

Since I already published an article on how to set up a similar monitoring stack, you can refer to it for more details. However, here is all the code you need to get rolling (who wants to read yet another article, right?).

For now, just create a new file with the following content, use the .tf extension, and run terraform init followed by terraform apply in the same folder to let Terraform do the work for us:

    # Initialize terraform providers
    provider "kubernetes" {
      config_path = "~/.kube/config"
    }

    provider "helm" {
      kubernetes {
        config_path = "~/.kube/config"
      }
    }

    # Create a namespace for observability
    resource "kubernetes_namespace" "observability-namespace" {
      metadata {
        name = "observability"
      }
    }

    # Helm chart for Grafana
    resource "helm_release" "grafana" {
      name       = "grafana"
      repository = "https://grafana.github.io/helm-charts"
      chart      = "grafana"
      version    = "7.1.0"
      namespace  = "observability"
      values     = [file("${path.module}/values/grafana.yaml")]
      depends_on = [kubernetes_namespace.observability-namespace]
    }

    # Helm chart for Loki
    resource "helm_release" "loki" {
      name       = "loki"
      repository = "https://grafana.github.io/helm-charts"
      chart      = "loki"
      version    = "5.41.5"
      namespace  = "observability"
      values     = [file("${path.module}/values/loki.yaml")]
      depends_on = [kubernetes_namespace.observability-namespace]
    }

    # Helm chart for promtail
    resource "helm_release" "promtail" {
      name       = "promtail"
      repository = "https://grafana.github.io/helm-charts"
      chart      = "promtail"
      version    = "6.15.3"
      namespace  = "observability"
      values     = [file("${path.module}/values/promtail.yaml")]
      depends_on = [kubernetes_namespace.observability-namespace]
    }

For this script to run properly, you need to create a values folder next to the Terraform file.

This folder will hold all our configuration for the stack to get the audit logs into Grafana.

Here is the content for each file:

values/grafana.yaml

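    # Note: Helm does not expand dotted keys in a values file (only --set does),
    # so the chart ignores the five persistence.* lines as written. To enable
    # persistence, nest them under a persistence: block; existingClaim then
    # requires a PersistentVolumeClaim named grafana-pvc to already exist.
    persistence.enabled: true
    persistence.size: 10Gi
    persistence.existingClaim: grafana-pvc
    persistence.accessModes[0]: ReadWriteOnce
    persistence.storageClassName: standard

    adminUser: admin
    adminPassword: grafana

    datasources:
      datasources.yaml:
        apiVersion: 1
        datasources:
          - name: Loki
            type: loki
            access: proxy
            orgId: 1
            url: http://loki-gateway.observability.svc.cluster.local
            basicAuth: false
            isDefault: true
            version: 1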

values/loki.yaml

    loki:
      auth_enabled: false
      commonConfig:
        replication_factor: 1
      storage:
        type: 'filesystem'
    singleBinary:
      replicas: 1

values/promtail.yaml

    # Add Loki as a client to Promtail
    config:
      clients:
        - url: http://loki-gateway.observability.svc.cluster.local/loki/api/v1/push
      # Scraping kubernetes audit logs located in /var/log/kubernetes/audit/
      # Assumes the node's /var/log is mounted into the Promtail pod at /var/log/host
      # (for example through the chart's extraVolumes and extraVolumeMounts values)
      snippets:
        scrapeConfigs: |
          - job_name: audit-logs
            static_configs:
              - targets:
                  - localhost
                labels:
                  job: audit-logs
                  __path__: /var/log/host/kubernetes/**/*.log

Now everything is set up for our monitoring stack to ingest audit logs from our Kubernetes node.

We can log in to Grafana using the credentials configured in the values file above.

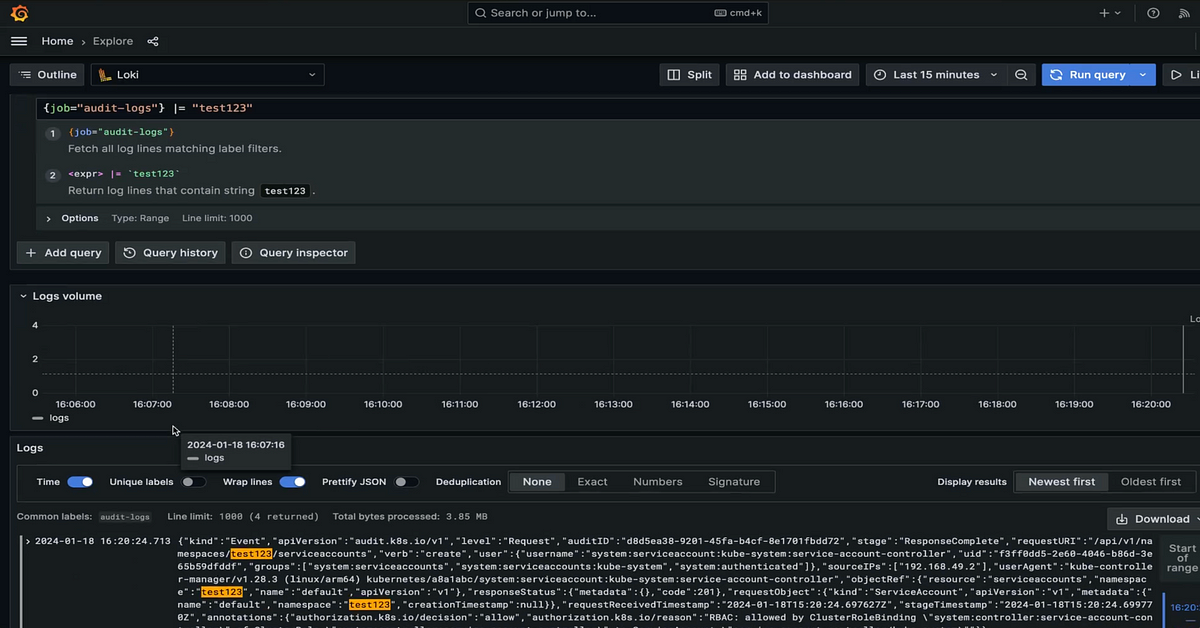

We head to the Explore tab and check our audit logs.
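In Explore, a LogQL query such as `{job="audit-logs"} | json | verb="delete"` selects the stream by the `job` label set in values/promtail.yaml and filters on fields extracted from each JSON event (the `json` parser flattens nested keys with underscores, so `objectRef.resource` becomes `objectRef_resource`). The sketch below builds such a query and the matching URL for Loki's `query_range` HTTP endpoint. The base URL is an assumption (for instance a local port-forward to the Loki gateway), the helper names are illustrative, and nothing is actually sent.

```python
from urllib.parse import urlencode


def logql(job, **field_filters):
    """Build a LogQL query: stream selector, JSON parsing, then equality filters."""
    query = '{job="%s"} | json' % job
    for field, value in field_filters.items():
        query += ' | %s="%s"' % (field, value)
    return query


def query_range_url(base_url, query, limit=100):
    """Build the URL for Loki's query_range endpoint with an encoded query."""
    params = urlencode({"query": query, "limit": limit})
    return base_url.rstrip("/") + "/loki/api/v1/query_range?" + params


query = logql("audit-logs", verb="delete", objectRef_resource="pods")
url = query_range_url("http://localhost:3100", query)  # assumed local port-forward
print(query)
print(url)
```

Pasting the printed query into the Explore query editor should show pod deletions recorded by the API server, provided such events exist in the selected time range.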

Now what?

This is a first step toward breathing more security into a Kubernetes cluster.

In addition to the benefits listed at the beginning of this article, audit logs can also help you:

- Verify user activity: verify that users are only accessing the resources they are authorized to access.

- Track changes to your cluster: such as the creation, deletion, or modification of resources. (Screenshot below)

- Resolve configuration problems: Audit logs can help you identify configuration problems that may be affecting your cluster.
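As one way to act on the second point, the sketch below turns audit events into a chronological change trail by keeping only mutating verbs and reading the target from `objectRef`. The sample events and the user name are hypothetical, and the verb list is an assumption about what counts as a change.

```python
import json

# Verbs treated as changes in this sketch.
MUTATING_VERBS = {"create", "update", "patch", "delete", "deletecollection"}

# Hypothetical sample events, deliberately out of chronological order.
SAMPLE_EVENTS = [
    '{"stage":"ResponseComplete","verb":"delete","user":{"username":"minikube-user"},"objectRef":{"resource":"pods","namespace":"default","name":"web-1"},"requestReceivedTimestamp":"2024-01-10T09:05:00.000000Z"}',
    '{"stage":"ResponseComplete","verb":"get","user":{"username":"minikube-user"},"objectRef":{"resource":"pods","namespace":"default","name":"web-1"},"requestReceivedTimestamp":"2024-01-10T09:01:00.000000Z"}',
    '{"stage":"ResponseComplete","verb":"create","user":{"username":"minikube-user"},"objectRef":{"resource":"deployments","namespace":"default","name":"web"},"requestReceivedTimestamp":"2024-01-10T09:00:00.000000Z"}',
]


def change_trail(lines):
    """Return sorted (timestamp, username, verb, target) tuples for mutating requests."""
    trail = []
    for line in lines:
        event = json.loads(line)
        if event.get("stage") != "ResponseComplete":
            continue
        if event.get("verb") not in MUTATING_VERBS:
            continue
        ref = event.get("objectRef", {})
        parts = (ref.get("namespace"), ref.get("resource"), ref.get("name"))
        target = "/".join(part for part in parts if part)
        username = event.get("user", {}).get("username", "<unknown>")
        trail.append((event.get("requestReceivedTimestamp", ""), username, event["verb"], target))
    # Timestamps in this fixed-width UTC format sort correctly as plain strings.
    return sorted(trail)


trail = change_trail(SAMPLE_EVENTS)
for timestamp, username, verb, target in trail:
    print(timestamp, username, verb, target)
```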

Overall, audit logs are a valuable tool for securing your Kubernetes cluster and ensuring the integrity and confidentiality of your data.

By enabling audit logs and using a centralized log management tool, you can gain valuable insights into the activity on your cluster and take action to prevent and respond to security threats.